
XPM project

eXplainable Predictive Maintenance (XPM)

- Project Coordinator: Slawomir Nowaczyk (Halmstad University)

- Partners: Slawomir Nowaczyk (HU-Halmstad University, Sweden), funder: VR; João Gama (IT-Inesc Tec, Portugal), funder: FCT; Grzegorz J. Nalepa (JU-Jagiellonian University, Poland), funder: NCN; Moamar Sayed-Mouchaweh (IMTLD-IMT Lille-Douai, France), funder: ANR

- Start time: 01.03.2021

- End time: 28.02.2023

- Duration: 24 months

- CHIST-ERA project page: external webpage

Summary

The XPM project aims to integrate explanations into Artificial Intelligence (AI) solutions within the area of Predictive Maintenance (PM). Real-world applications of PM are increasingly complex, with intricate interactions between many components. AI solutions are widely used in this domain; in particular, black-box models based on deep learning show very promising results in terms of both predictive accuracy and the ability to model complex systems.

However, the decisions made by these black-box models are often difficult for human experts to understand, and therefore to act upon. Deriving the complete repair plan and the maintenance actions that must be performed, based on the detected symptoms of damage and wear, often requires complex reasoning and planning processes that involve many actors and balance different priorities. It is not realistic to expect this complete solution to be created automatically, as there is too much context that needs to be taken into account. Therefore, operators, technicians and managers need insights to understand what is happening, why it is happening, and how to react. Today’s mostly black-box AI does not provide these insights, nor does it support experts in making maintenance decisions based on the deviations it detects. The effectiveness of a PM system depends much less on the accuracy of the alarms the AI raises than on the relevance of the actions operators take in response to these alarms.

In the XPM project, we will develop several different types of explanations (anything from visual analytics through prototypical examples to deductive argumentative systems) and demonstrate their usefulness in four selected case studies: electric vehicles, metro trains, a steel plant and wind farms. In each of them, we will demonstrate how the right explanations of decisions made by AI systems lead to better results across several dimensions, including: identifying the component or part of the process where the problem has occurred; understanding the severity and future consequences of detected deviations; choosing the optimal repair and maintenance plan from several alternatives created based on different priorities; and understanding why the problem occurred in the first place, as a way to improve system design in the future.

Objectives

The project has two primary objectives. The first is to develop novel methods for creating explanations of AI decisions within the PM domain (O1). There is a heated discussion in the literature today about the right approach to explainable AI (XAI): should it be based on an extra layer added on top of existing black-box models, or achieved through the development of inherently interpretable (glass) models? A lot of work is being done in both areas. We believe that both approaches have merit, but there are too few honest comparisons between them. Therefore, within this first general objective, the XPM project will pursue the following two specific sub-objectives (SO):

- SO1a: Develop a novel post hoc explainability layer for black-box PM models

- SO1b: Develop novel algorithms for creating inherently explainable PM models

The second project objective is to develop a framework for evaluating explanations within the XPM setting (O2). We believe that such a framework can subsequently be generalised to other domains beyond the scope of XPM. Within this context, we have identified two specific sub-objectives:

- SO2a: Propose multi-faceted evaluation metrics for PM explanations

- SO2b: Design an interactive decision support system based on explainable PM

There is a need for more specific evaluation metrics within the domain of PM, especially Functionally-Grounded and Human-Grounded ones. In particular, there are currently no solutions capable of capturing the needs of different actors based on their individual competence levels and specific goals. Yet within any given industry, multiple stakeholders need to interact with a PM system, often for different reasons. Understanding their needs and making sure each receives the right support is critical. Moreover, for Application-Grounded evaluation, it is crucial to understand how AI decisions and their explanations affect the planning and optimisation tasks that human experts perform to create repair plans. To this end, we will build a decision support system that, based on the patterns detected by AI and augmented with explanations, provides different stakeholders with tools to create and update maintenance and repair plans. This will allow us to accurately measure the effect these explanations have on the final result.

In order to achieve these objectives and evaluate their impact within the duration of the project, the methods we develop will be tested and demonstrated in four different PM use cases across disparate industries. Each member of the XPM consortium has prior results in the analysis of industrial data in the PM context, including [sm,sa,fp]. Moreover, all members have well-established collaborations with industrial partners interested in the PM area and in the XPM project itself. Letters of interest from these partners are attached and available online.

Expected Impacts

The most important scientific impacts we foresee are as follows:

- development of a novel post hoc explainability layer for black-box PM models, supporting their proper selection and parameterisation (related to SO1a),

- development of new glass models for PM, i.e., inherently explainable models incorporating domain expert knowledge (related to SO1b),

- formulation of new metrics for explainable PM models, combining quantitative performance measures with qualitative evaluation by human experts (related to SO2a),

- linking the explanations to decision support through the design of an interactive human-machine tool (related to SO2b).

At the industrial level, our methods, suitable for AI systems currently deployed, will lead to:

- an improved decision-making process in the industries currently using black-box PM, through explanations supporting the creation of maintenance plans,

- increased awareness of the pros and cons of glass models as an alternative to black-box ones,

- more efficient maintenance methods through a better understanding of the product life cycle, as well as of how different factors affect product lifetime.

At the societal level, we expect impact related to improved trustworthiness of AI systems in the industrial context resulting from:

- increasing their understandability on the technical level,

- legal analysis of liability norms related to the development and operation of the AI systems,

- elaboration of guidelines, standards and criteria for the purpose of evaluation of the AI systems and their certification,

- the integration of human expert-based decision making with the AI operation.

Project structure

Consortium

- HU-Halmstad University, Sweden, PI: Slawomir Nowaczyk, Funder: VR

- IT-Inesc Tec, Portugal, PI: João Gama, Funder: FCT

- JU-Jagiellonian University, Poland, PI: Grzegorz J. Nalepa, Funder: NCN

- IMTLD-IMT Lille-Douai, France, PI: Moamar Sayed-Mouchaweh, Funder: ANR

Work packages

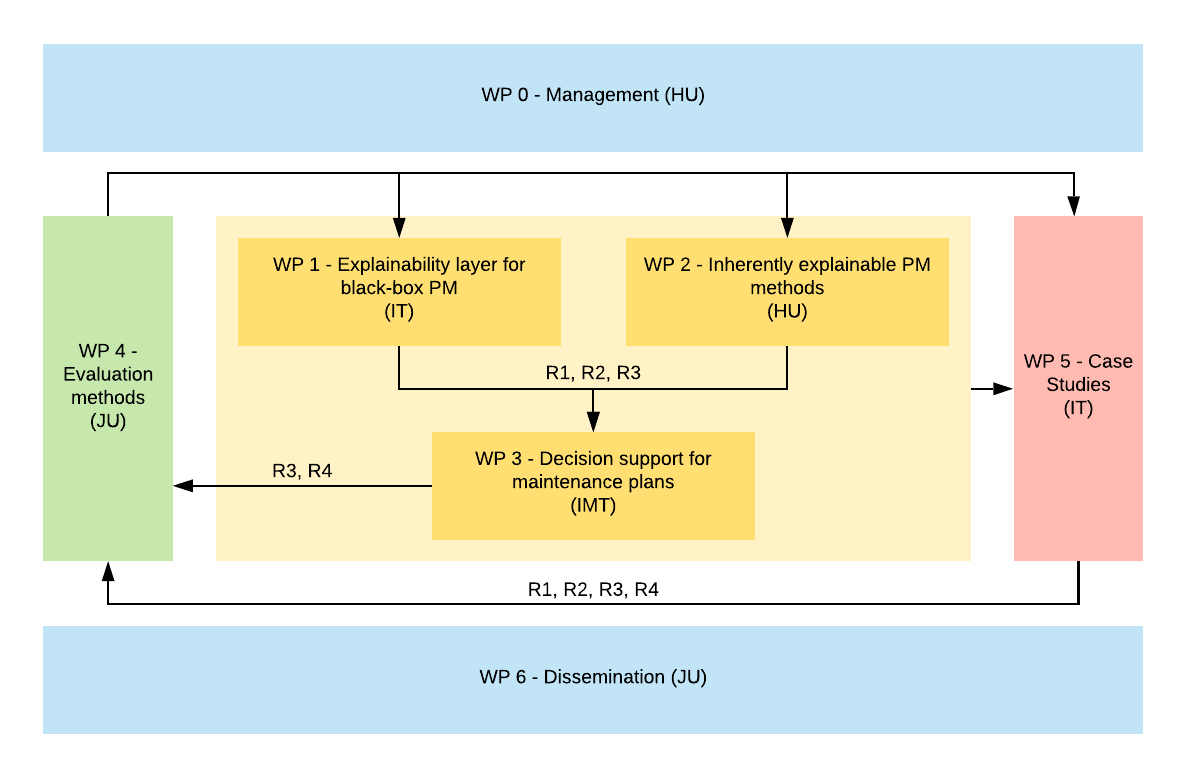

The work plan comprises seven work packages (WP0 to WP6), further divided into tasks, which are briefly described below.

WP0: Management. The Management WP aims to ensure the proper execution of the project, the early detection of any deviations, and the efficient resolution of any issues discovered.

WP1: Explainability Layer for Black-box PM. In this work package, we will develop an explainability layer for black-box models that covers different aspects of prediction and self-adaptive modelling (SO1a). We will identify the proper context of explanations in PM in order to interact effectively with different actors, from system operators to management staff. For each alarm, the outputs of this WP are the failure location and root cause analysis (R1), the severity and remaining useful life (RUL) (R2), and the expected impact on component performance (R3).

WP2: Inherently Explainable PM Methods. In this work package, we will propose new PM methods that are developed with explainability in mind and produce glass models (SO1b), continuously involving domain experts in the model-building process. We will use frequent feedback on interpretability and demonstrate how transparent, understandable models can be linked with the underlying data from industrial monitoring systems. Taking a top-down perspective, from domain knowledge to data (as opposed to the bottom-up approach of WP1), we will introduce expert knowledge into the PM modelling process itself to better understand and explain industrial data. The outputs match those of WP1: failure location and root cause analysis (R1), severity and RUL (R2), and impact on component performance (R3).

WP3: Decision Support for Maintenance Plans. This work package aims to understand the physics of degradation (e.g., wear, cracks, clogging, corrosion) with respect to failure modes, their propagation mechanisms, and the variables involved in these phenomena (e.g., operational, environmental, load). By identifying the key features and influencing factors of the critical components and the dynamic evolution of their degradation (R2), we will develop an interactive decision support tool and human-machine interface (HMI) for prescriptive maintenance advice. The tool uses explanations supplied by the models from WP1 and WP2 to present the degradation characteristics and explain their impact on the selected performance criteria in the correct context (R3). In this way, it helps operators understand the situation and create a suitable repair and maintenance plan (R4).

WP4: Evaluation methods. Since explainability is always domain-, context- and task-dependent, it is challenging to measure in general terms. Therefore, in this WP, we will formulate a range of evaluation methods for explanations relevant to XPM (SO2a). Based on them, we will be able to assess the usefulness of explanations in specific cases, as well as their contribution to the decision-making process in a broad sense.

WP5: Case studies. In each case study, the effort will be shared between the leading partner and an additional partner. The four case studies will provide the testbed diversity needed to assess the generality of the results in light of the XPM objectives, as well as valuable feedback. This WP will also bring to XPM the sector-specific domain knowledge that is fundamental to many future applications. We will use these scenarios to demonstrate the contributions of the project. They include: a steel factory (JU), electric heavy-duty vehicles (HU), wind farms (IMTLD) and Metro Porto (IT-Inesc Tec).

WP6: Dissemination. The goal of this package is to raise awareness of the XPM project and to boost interest in it within the related scientific communities and among different actors, including industry stakeholders. A further objective is to disseminate knowledge to research and development teams beyond the project consortium.

Dependencies

Project team

HU

- Principal Investigator: Slawomir Nowaczyk

- Co-investigators: Thorsteinn Rögnvaldsson, Sepideh Pashami

- Supporting investigators: Aneta Napieraj

- PhD Students:

IT

- Principal Investigator: João Gama

- Co-investigators: Rita P. Ribeiro, Bruno Veloso

- Supporting investigators: João Vinagre, Narges Davari

- PhD Students:

JU

- Principal Investigator: Grzegorz J. Nalepa

- Co-investigators: Szymon Bobek, Michał Araszkiewicz

- Supporting investigators: Przemysław Stanisz

- PhD Students: Jakub Jakubowski, Michał Kuk, Maciej Szelążek, Radosław Pałosz

IMTLD

- Principal Investigator: Moamar Sayed-Mouchaweh

- Co-investigators: Lala Rajaoarisoa

- Supporting investigators:

- PhD Students:

Papers

- Davari, N., Veloso, B., Costa, G.D.A., Pereira, P.M., Ribeiro, R.P. and Gama, J., 2021. A Survey on Data-Driven Predictive Maintenance for the Railway Industry. Sensors, 21(17), p. 5739. https://doi.org/10.3390/s21175739, https://www.mdpi.com/1424-8220/21/17/5739

- Davari, N., Veloso, B., Ribeiro, R.P., Pereira, P.M. and Gama, J., 2021, October. Predictive maintenance based on anomaly detection using deep learning for air production units in the railway industry. In 2021 IEEE 8th International Conference on Data Science and Advanced Analytics (DSAA), pp. 1-10. https://doi.org/10.1109/DSAA53316.2021.9564181, https://www.researchgate.net/publication/355463810_Predictive_maintenance_based_on_anomaly_detection_using_deep_learning_for_air_production_unit_in_the_railway_industry

- Davari, N., Pashami, S., Veloso, B., Nowaczyk, S., Fan, Y., Pereira, P.M., Ribeiro, R.P. and Gama, J., 2022. A fault detection framework based on LSTM autoencoder: a case study for Volvo bus data set. In IDA 2022. https://doi.org/10.1007/978-3-031-01333-1_4, https://www.researchgate.net/publication/359939634_A_fault_detection_framework_based_on_LSTM_autoencoder_a_case_study_for_Volvo_bus_data_set

- Sant’Ana, B., Veloso, B. and Gama, J., 2022. Predictive maintenance for wind turbines. In 5th International Conference on Energy and Environment: bringing together Engineering and Economics. Accepted, awaiting publication.

- Sant’Ana Real Pereira, B., 2021. Fault detection in wind turbines. Master's thesis in Data Analytics, University of Porto. https://repositorio-aberto.up.pt/handle/10216/136624

- Bobek, S., Bałaga, P. and Nalepa, G.J., 2021. Towards model-agnostic ensemble explanations. In ICCS 2021. Springer. https://doi.org/10.1007/978-3-030-77970-2_4, https://www.researchgate.net/publication/352286139_Towards_Model-Agnostic_Ensemble_Explanations

- Bobek, S. and Nalepa, G.J., 2021. Introducing uncertainty into explainable AI methods. In ICCS 2021. Springer. https://doi.org/10.1007/978-3-030-77980-1_34, https://www.researchgate.net/publication/352254836_Introducing_Uncertainty_into_Explainable_AI_Methods

- Jakubowski, J., Stanisz, P., Bobek, S. and Nalepa, G.J., 2021. Explainable anomaly detection for hot-rolling industrial process. In DSAA 2021. IEEE. https://doi.org/10.1109/DSAA53316.2021.9564228, https://www.researchgate.net/publication/355460527_Explainable_anomaly_detection_for_Hot-rolling_industrial_process?ev=project

- Jakubowski, J., Stanisz, P., Bobek, S. and Nalepa, G.J., 2022. Anomaly detection in asset degradation process using variational autoencoder and explanations. Sensors, 22(1), p. 291. https://doi.org/10.3390/s22010291, https://www.mdpi.com/1424-8220/22/1/291

Project-related events

- 2022: Practical applications of explainable artificial intelligence methods (PRAXAI2022), at the 9th IEEE International Conference on Data Science and Advanced Analytics http://praxai.geist.re

- 2022: The Semantic Data Mining (SEDAMI) Workshop at ECML-PKDD http://sedami.geist.re

- 2022: ECML/PKDD 2022 Workshop on IoT Streams for Predictive Maintenance https://abifet.wixsite.com/iotstream2022

- 2021: Special Issue of the Open Access MDPI Sensors journal “Machine Learning from Heterogeneous Condition Monitoring Sensor Data for Predictive Maintenance and Smart Industry” https://www.mdpi.com/journal/sensors/special_issues/predictive_maintenance_sensor

- 2021: DSAA2021, and the special session on Data-Driven Predictive Maintenance for Industry 4.0 (XPDM 2021) https://sites.google.com/g.uporto.pt/ddpdm2021/home

- 2021: DSAA2021 summer school https://hh.se/PMSummerSchool

- 2021: Kaggle Challenge concerning predicting the correct configuration of the billing system based on CRM configurations https://www.kaggle.com/c/systemreconciliation

- 2020: 2nd ECML/PKDD 2020 Workshop on IoT Streams for Data-Driven Predictive Maintenance https://abifet.wixsite.com/iotstream2020

Tools and Datasets

- An open-source implementation of Local Uncertain Explanations is available at: https://github.com/sbobek/lux

- As a result of the work on XAI metrics, the InXAI prototype software was created and released as an open-source tool at: https://github.com/sbobek/inxai
